Software-Assisted Psychedelic-Assisted Psychotherapy: Part 2/n

Data Collection and Predictive Technologies

This is an ongoing series exploring the role of technology in psychedelic-assisted therapies. Today we are looking at:

For psychedelics, Digital Therapeutics is a better comparison than cannabis.

A Black Mirror Scenario of Data Collection and Predictive Technologies in Psychedelic Assisted Therapy

As we covered in Part 1, the Digital Therapeutics field is rising fast. The first video game has been approved for ADHD, and many other products, including virtual reality and immersive technologies, are in clinical development or at some stage of FDA review.

It is common practice to frame the psychedelic field as the next iteration of cannabis. However, this framing is flawed for many reasons, and we spend a lot of time trying to delineate the differences and similarities between the two sectors.

The Dog and Pony Show in the Canadian public markets is predicated upon the "psychedelics is cannabis 2.0" narrative, and there's no shortage of eye-rolling, exasperation, and cognitive dissonance when discussing "the current state of the market."

In the last week, as a result of being more in touch with the field of Digital Therapeutics, I am starting to think that it is the more appropriate comparison for the psychedelic sector. Here are a few points that reflect this early theory:

Software-as-a-drug is battling a long-standing incumbent: chemistry/ligand-based drugs. Medicine is a conservative field, and the movement towards Evidence-Based Practice, while undoubtedly a good thing, has perhaps made the profession even more conservative toward new technologies and approaches. Clinicians' widespread disdain for electronic medical records (EMRs) is evidence of resistance to software's encroachment on medicine. Similarly, psychedelics are battling long-standing incumbent perceptions of danger and illegality, as well as a reductive medical model.

Unlike conventional pill-based medicines, both psychedelics and DTx require "engagement," interaction, and consistency. Even in the coming wave of DTx 2.0, in which algorithms engage directly with neural circuits, one still needs to engage with the technology to reap the benefits. Furthermore, as we saw with the Akili product, DTx is part of a broader therapeutic strategy rather than a stand-alone treatment. Likewise, set, setting, preparation, integration, and the therapeutic alliance are essential in our field, and we stress that therapeutic outcomes are a function of psychotherapy assisted by psychedelics, not of the psychedelics alone.

In psychedelics, there are "categories of use." They go by terms like recreational, spiritual, and therapeutic, and policy debates map onto these uses (DecrimCA seeks to allow sales for 'recreational' (adult) use, while Oregon PSI is focused on therapeutic use). To become a prescription medicine that insurance pays for, a psychedelic needs FDA approval. This is MAPS' long-term strategy: create a psychedelic medicine and leverage it to amend drug policy. Digital therapeutics has an analogous story. Companies have designed products that track biometrics and behavior, send reminders, and offer rewards/incentives, claimed a benefit, and sold them to consumers, yet these products have not gone through rigorous clinical trials to prove efficacy. This is the difference between consumer wearables and "digital wellness" on the one hand and "digital therapeutics" on the other; it's kinda like supplements vs. prescription medication.

Data Collection and Predictive Technologies

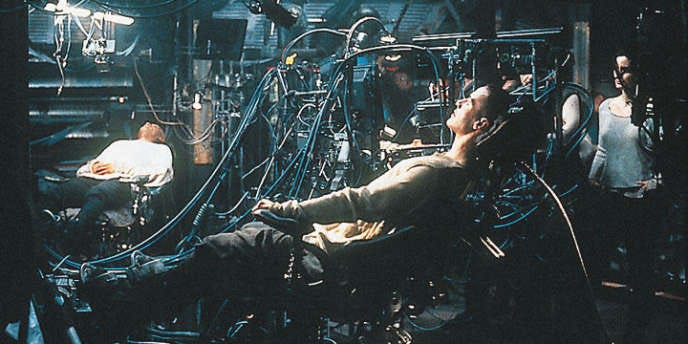

The Black Mirror

To many, the idea of software that interfaces with our neural circuitry in conjunction with psychedelics, for the treatment of disease or for betterment, sounds like the unpublished follow-up to Brave New World (or is it the actual plot of BNW? It has been a while.)

What could go wrong?

Black Mirror is a British dystopian science fiction series that explores the unanticipated consequences of new technologies.

It's like a modern Twilight Zone.

A common trope is the weird shit that happens when humans harness the power of computers for enhancing cognition.

The first and most obvious concern that patients, clinicians, investors, and the psychedelic community must stress is personal data collection and use.

The type of data we are talking about (voice and tone inferences, temperature, pupil size, galvanic skin response, heart rate, facial movements and microexpressions, etc.), when processed through machine learning algorithms, makes the conspiracy theorist in me think that this is all a ruse by the deep state to leverage psychedelics for societal control.

No, I am not that cynical, just saying.

As a quick aside and as a counter to the above dystopian image, please consider this tweet thread from Balaji Srinivasan. As a self-described Luddite, I find his thinking on technology helpful for challenging my negative biases.

A few weeks ago, PsyTech hosted a panel discussion titled The FUTURE of Psychedelics: A Technological Perspective, which led me down the rabbit hole of perceptual data collection and predictive technologies.

James Stephens of Aurelius Data:

“…really what we focus on is gathering perception data and work on building products and systems that form rituals that actually shape the bias to have a more likely outcome to have a more safe or effectual psychedelic experience... gathering the all the data from everyone's perception and being able to create a bias structure that creates a positive outcome, whether that's for depression, or for not having a bad trip...that's where data and product building and formulation can all come together... to increase the likelihood of a positive experience.”

There are a few ideas in here worth exploring, including "bias structure", but I want to focus on "gathering perception data."

The introduction of technology into therapeutic settings like psychotherapy is where we need to tread most cautiously since the disclosures made in this context are confidential, and we've already seen missteps by teletherapy companies.

From The Verge last year:

“study, published Friday in the journal JAMA Network Open, researchers searched for apps using the keywords “depression” and “smoking cessation.” Then they downloaded the apps and checked to see whether the data put into them was shared by intercepting the app’s traffic. Much of the data the apps shared didn’t immediately identify the user or was even strictly medical. But 33 of the 36 apps shared information that could give advertisers or data analytics companies insights into people’s digital behavior. And a few shared very sensitive information, like health diary entries, self reports about substance use, and usernames.”

Even if a therapist discloses something a patient said, say the confession of a crime, it is not immediately incriminating.

However, if a patient were to disclose this under a technology-enabled setting and it was recorded along with their voice tone, heart rate, galvanic skin response, pupil dilation, etc., this is a very different scenario with very different immediate repercussions.

The introduction of remote monitoring for psychedelic sessions would demand that every possible safety precaution be taken. That would mean collecting perception data such as:

voice

voice tone and inflections

temperature

movement

galvanic skin response

heart rate

brain waves (EEG)

There's more, I am sure.

The yin/yang of this data collection is that on the one hand, it helps protect patients, but on the other, it can be used for predicting behavior, which makes many uncomfortable.

Across thousands or millions of patients, predictions can be made about outcomes and about more challenging questions, like who is likely to harm themselves or someone else.

“One of the things we are seeing with the rise of machine learning and artificial intelligence is predictive technologies. And we enter this really weird Minority Report State.”

On the one hand, predictive technologies, perhaps first through algorithmic responses to psychometric surveys and eventually through collected biometric data, might predict who will and who will not respond to treatments.

But could such technology also predict who would be likely to resume destructive behavior such as harmful substance use, sexual or physical abuse, suicide, or some other unwanted outcome?

What are clinicians or the companies that enable such insight to do?

This, of course, is also a challenge in other domains of psychiatry and mental health, but it might take a unique form in psychedelic medicine, since the psychedelic experience is perhaps more revealing than conventional therapy. Could the openness that comes from a psychedelic experience, which potentiates healing in one instance, create a scenario of self-incrimination in another, where treatment does not take root and the sources of distress persist?

In short, what do we do with the people who are identified by predictive technologies as being a threat to themselves or others?

Just a quick reminder: MAPS is undertaking a huge fundraising effort to bring MDMA to market.

Thanks as always for reading and see you on Wednesday.

Zach